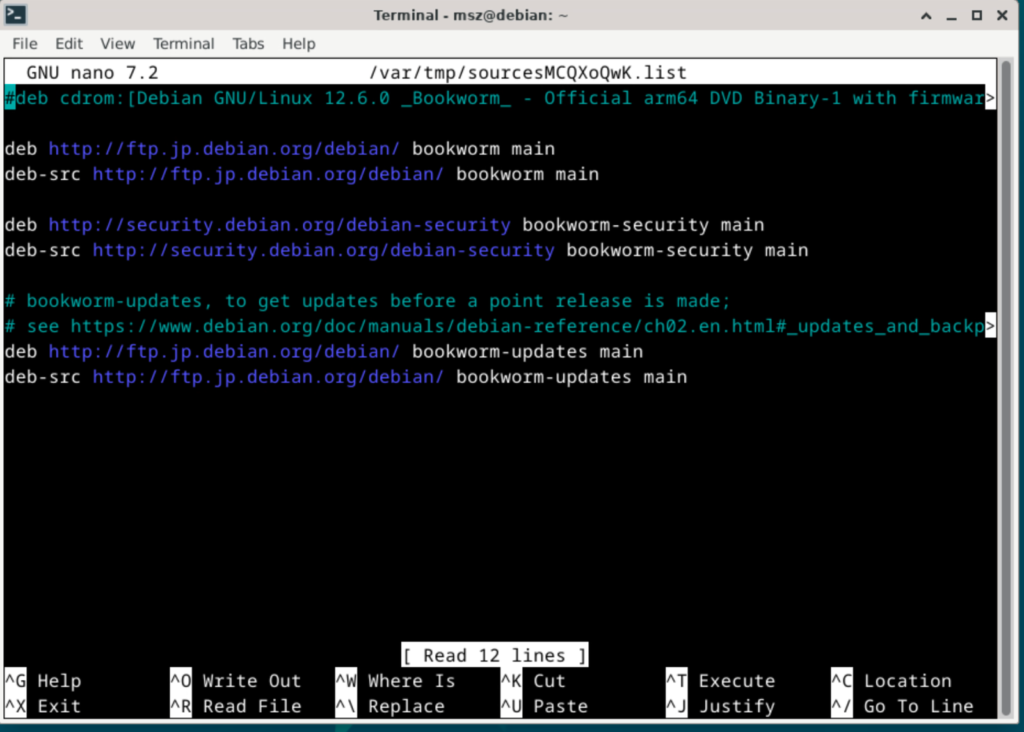

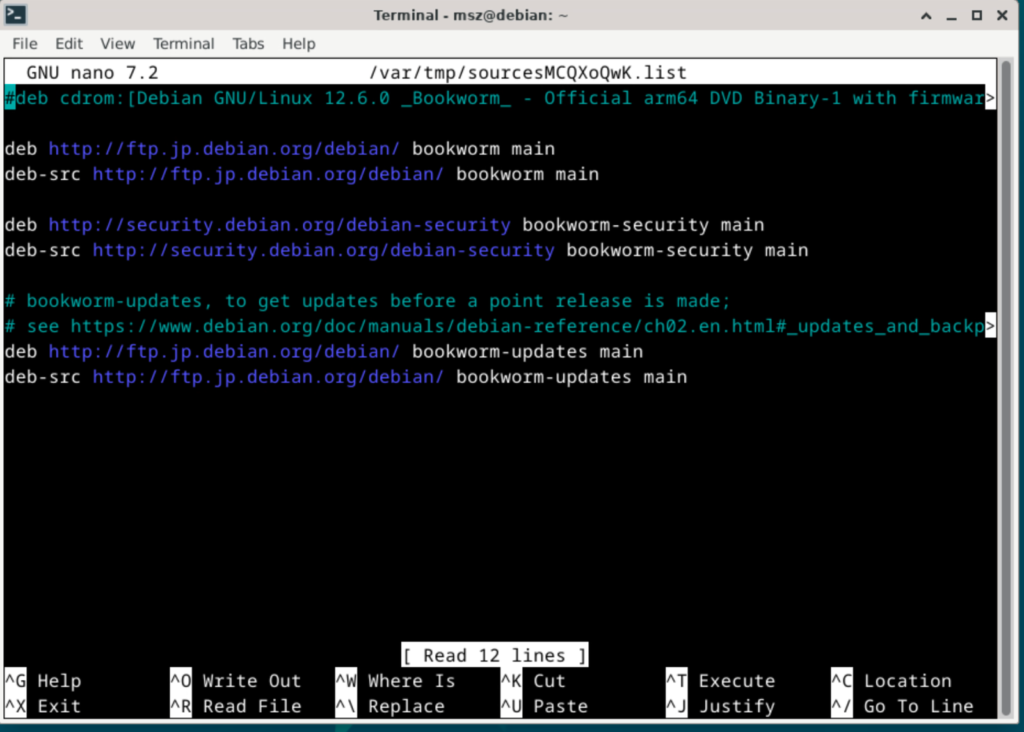

Run the following to open `/etc/apt/sources.list` in an editor:

```shell
sudoedit /etc/apt/sources.list
```

Then comment out the first line by adding `#` to the beginning.

Problem solved!


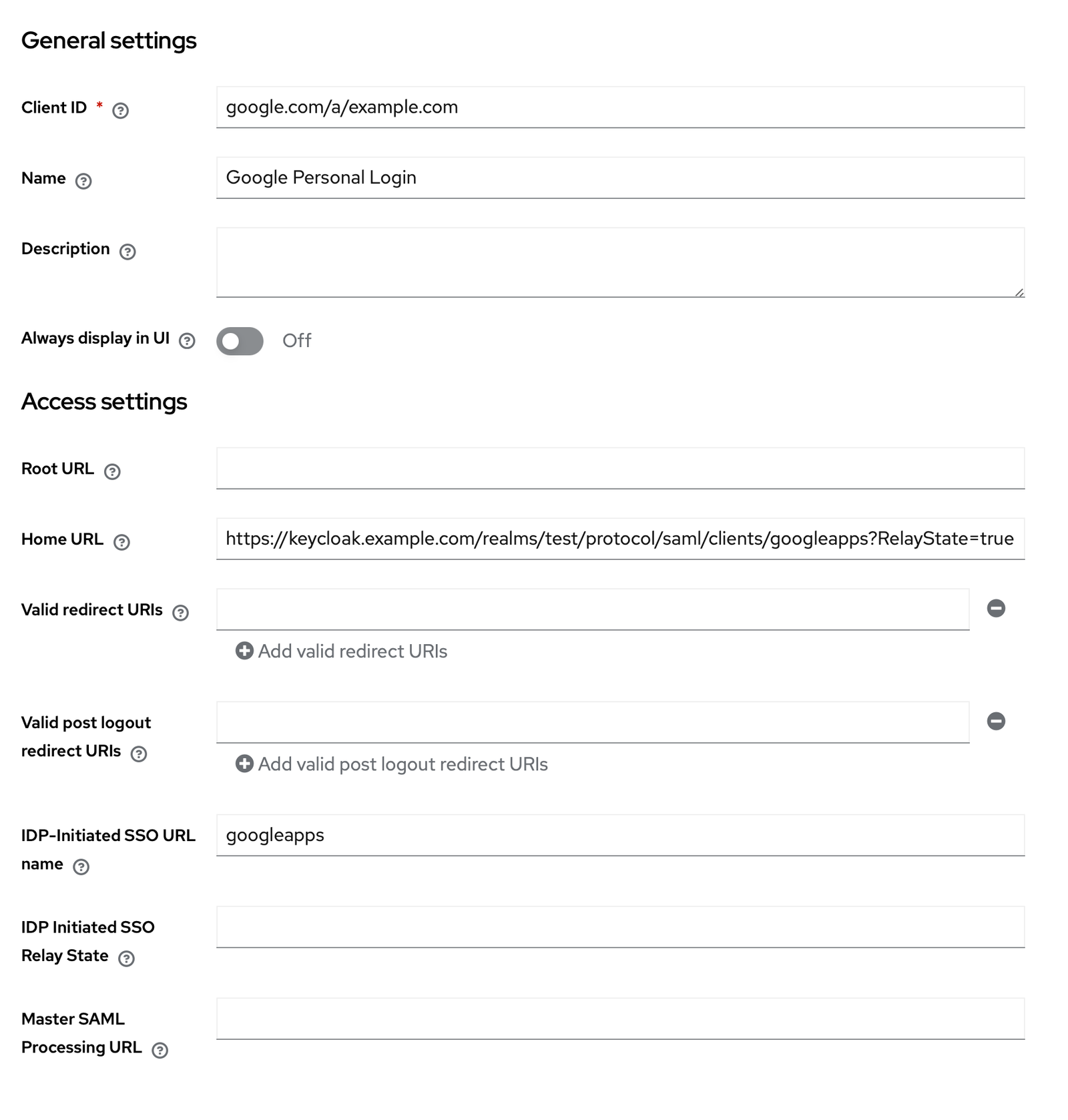

This guide explains how to set up your enterprise Keycloak as the identity provider your employees use to log into Google Workspace.

Most guides online explain how to use Google as an identity provider for Keycloak. The only one I found about using Keycloak as an identity provider for Google was outdated and did not work, so I wrote this article.

First, create a new SAML client in Keycloak using the following configuration:

Remember to replace example.com with your Keycloak domain.

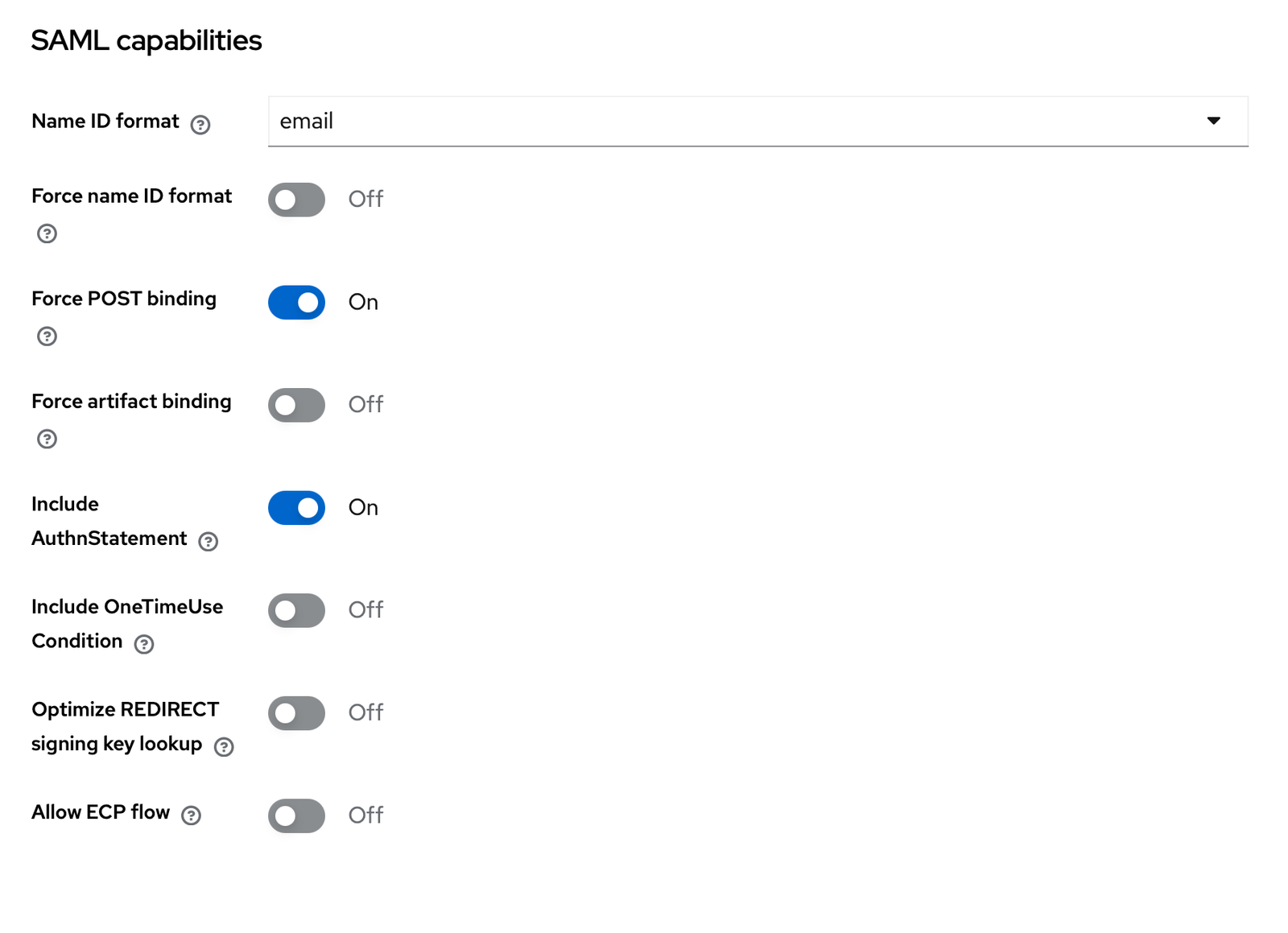

Google requires an email mapping. This means that if a user has a Google Workspace account with a given email address, you must set that user's email property in Keycloak to the same address, and set SAML capabilities > Name ID format (below) to email.

The rest of the configuration can stay untouched.

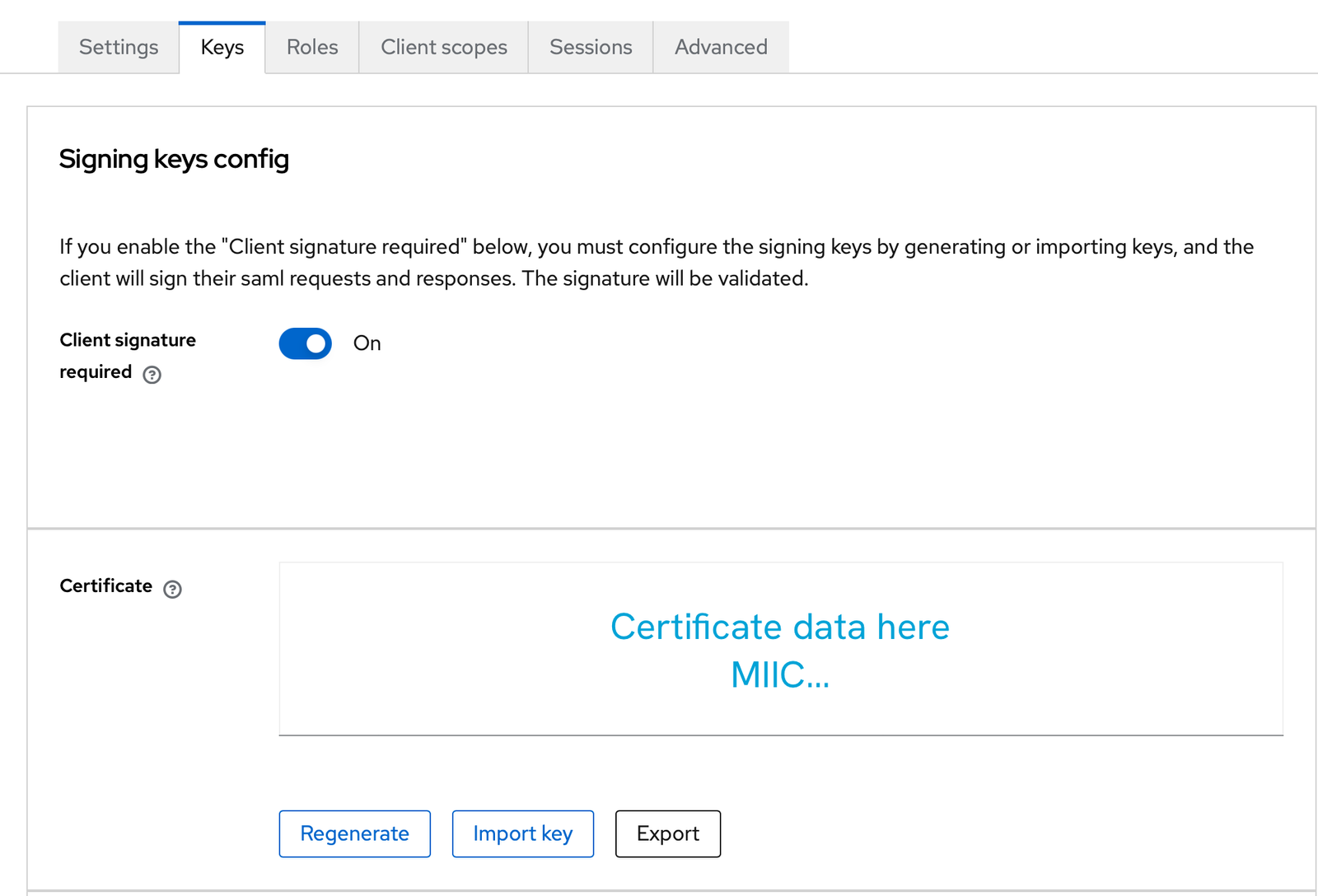

After creating the client, open it and go to the Keys tab. Copy the certificate into a new file called `saml_pub_cert.pem`.

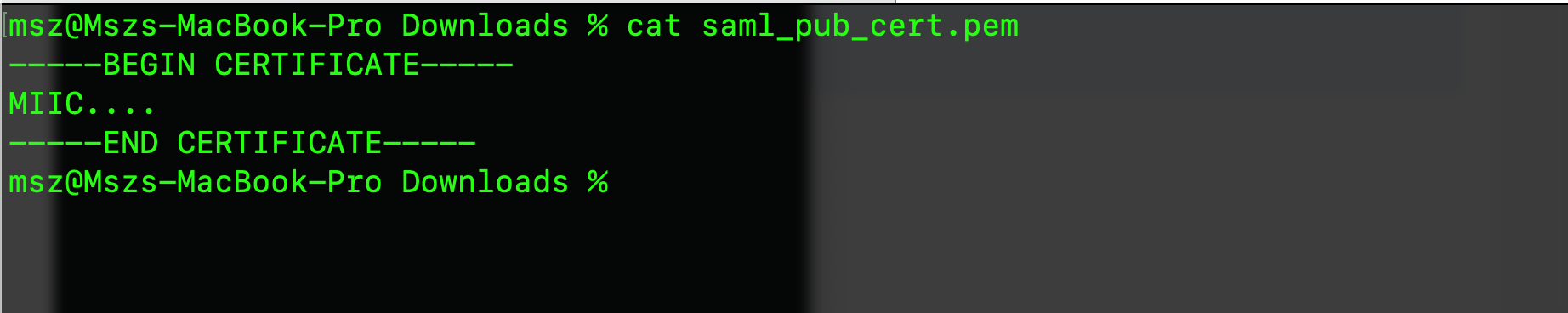

Now make sure the file starts with `-----BEGIN CERTIFICATE-----` on its own line and ends with `-----END CERTIFICATE-----` on its own line, like this:

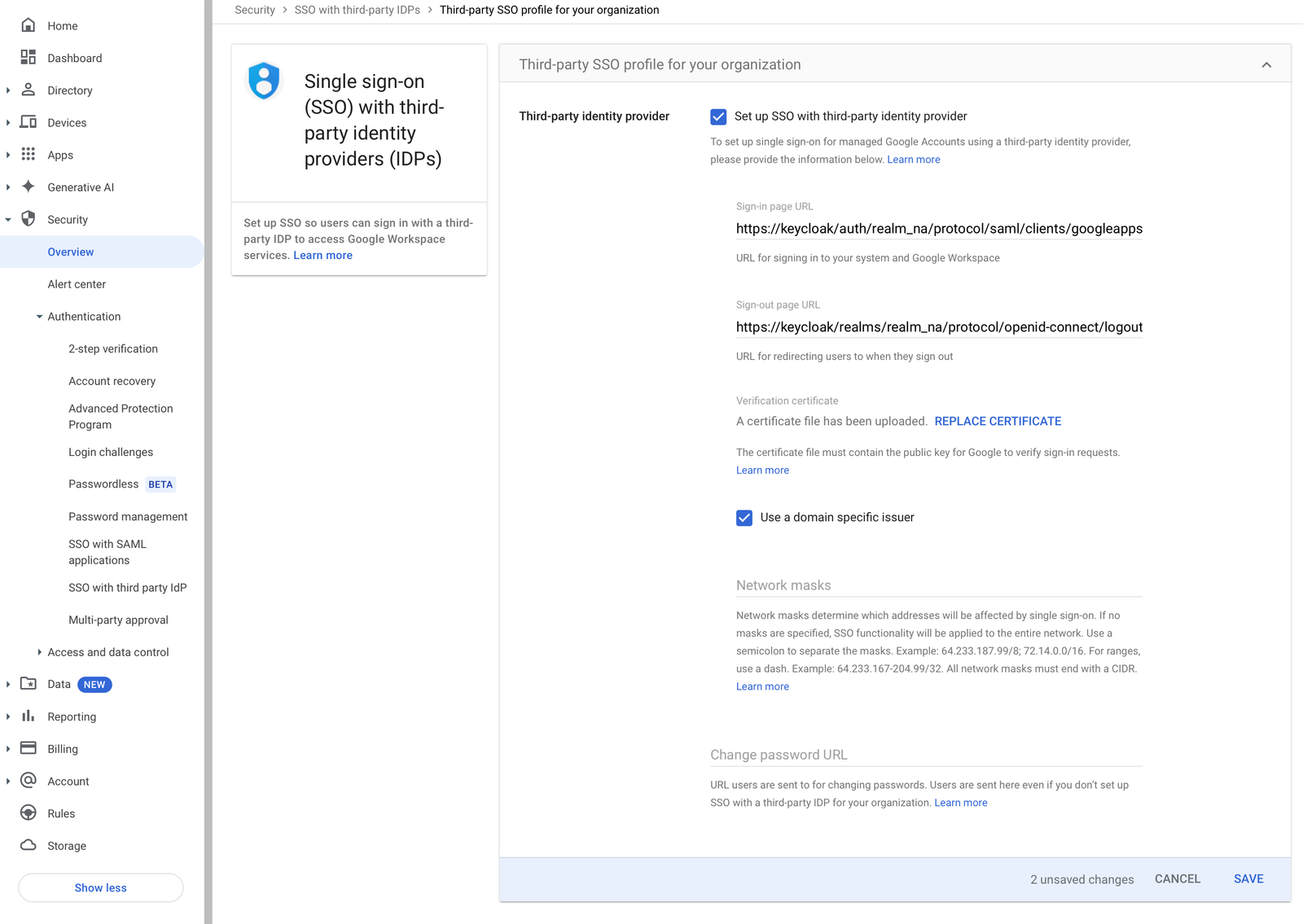
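If you would rather build `saml_pub_cert.pem` with a script, here is a minimal Node.js sketch. It assumes you copied the bare base64 string from the Keys tab (no header lines); `toPem` is a hypothetical helper, not a Keycloak API, and wrapping the body at 64 characters follows the usual PEM convention.

```javascript
// Minimal sketch: wrap a bare base64 certificate (as copied from Keycloak's
// Keys tab) in PEM armor. `toPem` is a hypothetical helper, not a Keycloak API.
function toPem(base64Cert) {
  // Drop any whitespace or line breaks picked up while copying
  const body = base64Cert.replace(/\s+/g, "");
  if (!/^[A-Za-z0-9+/]+={0,2}$/.test(body)) {
    throw new Error("Input does not look like a base64 certificate");
  }
  // PEM bodies are conventionally wrapped at 64 characters per line
  const lines = body.match(/.{1,64}/g);
  return [
    "-----BEGIN CERTIFICATE-----",
    ...lines,
    "-----END CERTIFICATE-----",
    "",
  ].join("\n");
}

// Usage (Node.js): write the result to saml_pub_cert.pem
// const fs = require("node:fs");
// fs.writeFileSync("saml_pub_cert.pem", toPem(copiedString));
```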

Go to your Google Admin console and open Security > SSO with third-party IdPs.

On that page, click to create a profile in the “Third-party SSO profile for your organization” section:

For the verification certificate, upload your `saml_pub_cert.pem`.

Save the changes.

Now, access this URL:

https://your_keycloak_domain.example.com/realms/your_realm/protocol/saml/clients/googleapps?RelayState=true

You should now be able to log in.
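The URL above follows Keycloak's IdP-initiated SSO pattern, `/realms/<realm>/protocol/saml/clients/<IDP-Initiated SSO URL name>`. A small sketch that assembles it, assuming `googleapps` is the IDP-Initiated SSO URL name set on the client during configuration; the function name is hypothetical.

```javascript
// Sketch: build Keycloak's IdP-initiated SAML SSO URL.
// Assumes `urlName` matches the client's "IDP-Initiated SSO URL name".
function idpInitiatedSsoUrl({ keycloakHost, realm, urlName, relayState }) {
  const url = new URL(
    `/realms/${encodeURIComponent(realm)}/protocol/saml/clients/${encodeURIComponent(urlName)}`,
    `https://${keycloakHost}`
  );
  // RelayState is optional; only add it when provided
  if (relayState !== undefined) {
    url.searchParams.set("RelayState", String(relayState));
  }
  return url.toString();
}

console.log(
  idpInitiatedSsoUrl({
    keycloakHost: "your_keycloak_domain.example.com",
    realm: "your_realm",
    urlName: "googleapps",
    relayState: true,
  })
);
// → https://your_keycloak_domain.example.com/realms/your_realm/protocol/saml/clients/googleapps?RelayState=true
```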

After logging in, you might see this page:

I have not figured out how to fix this yet, but you are already logged in at this point. Navigate to google.com or Gmail and you will see that you are signed in with your Keycloak identity.

In Mastodon v4.3.0, the newest version at the time of writing, you only need to modify one file to change the maximum character count.

If you search for the max_characters keyword in the Mastodon GitHub repository, you will see that everything points to a constant called `MAX_CHARS` in `StatusLengthValidator`.

To modify it, first SSH into your Mastodon server and switch to a root shell:

```shell
sudo -s
```

Then switch to the Mastodon user:

```shell
su - mastodon
```

Now edit the validator file:

```shell
nano -w live/app/validators/status_length_validator.rb
```

At the very top of the file is the `MAX_CHARS` constant, which defaults to 500. Change it to another integer, for example 3000 to allow up to 3000 characters per post:

```ruby
# frozen_string_literal: true

class StatusLengthValidator < ActiveModel::Validator
  MAX_CHARS = 3000
  URL_PLACEHOLDER_CHARS = 23
  URL_PLACEHOLDER = 'x' * 23
  # ... rest of the file unchanged
```

That’s how you do it! Now exit to the root shell and restart the Mastodon services, or simply reboot:

```console
mastodon@Mastodon:~$ exit
logout
root@Mastodon:/home/ubuntu# systemctl restart 'mastodon*'
```

You can read the custom character count from the `/api/v1/instance` endpoint: it is the integer at `configuration.statuses.max_characters` in the JSON response.
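Clients can check drafts against the limit the server advertises. Below is a simplified Node.js 18+ sketch, assuming the `configuration.statuses.max_characters` field described above. It approximates `StatusLengthValidator` by counting every URL as 23 characters (`URL_PLACEHOLDER_CHARS`) and remote mentions by their local part only, but it uses a naive URL regex instead of Mastodon's own entity extractor and ignores content-warning text, so treat its counts as estimates. All function names are hypothetical.

```javascript
// Simplified approximation of Mastodon's StatusLengthValidator (not the real code).
const URL_PLACEHOLDER_CHARS = 23;

// Read max_characters from a parsed /api/v1/instance response
function maxCharactersFromInstance(instance) {
  return instance?.configuration?.statuses?.max_characters ?? null;
}

// Estimate the length of a draft roughly the way the validator counts it
function countStatusLength(text) {
  const countable = text
    // Every http(s) URL counts as a fixed-width placeholder
    .replace(/https?:\/\/\S+/g, "x".repeat(URL_PLACEHOLDER_CHARS))
    // @user@remote.domain counts as @user
    .replace(/@(\w+)@[\w.-]+/g, "@$1");
  // Count grapheme clusters so that an emoji sequence counts as one character
  const segmenter = new Intl.Segmenter(undefined, { granularity: "grapheme" });
  return [...segmenter.segment(countable)].length;
}

// Fetch the limit from a live instance (uses the global fetch of Node.js 18+)
async function fetchMaxCharacters(origin) {
  const res = await fetch(new URL("/api/v1/instance", origin));
  if (!res.ok) throw new Error(`Instance request failed: HTTP ${res.status}`);
  return maxCharactersFromInstance(await res.json());
}

// Usage (hypothetical instance URL):
// const max = await fetchMaxCharacters("https://mastodon.example.com");
// console.log(countStatusLength(draft) <= max);
```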

As I am working on a brand-new, Discord-style Matrix client, I ran into an issue: because I use the newest Matrix Rust SDK, it requires Sliding Sync (as does the new Element X app).

The original article includes a guide to setting up a separate sliding sync proxy. But since the newest Matrix Synapse already supports native sliding sync, I am just going to give you my Docker Compose file and my Nginx file:

```yaml
services:
  app:
    image: dotwee/matrix-synapse-s3
    restart: always
    ports:
      - "8008:8008"
    volumes:
      - /var/docker_data/matrix:/data
    depends_on:
      - postgres

  postgres:
    image: postgres:13
    container_name: postgres
    environment:
      POSTGRES_DB: syncv3
      POSTGRES_USER: postgres
      POSTGRES_PASSWORD: ${DATABASE_PASSWORD}
    volumes:
      - postgres_data:/var/lib/postgresql/data

  sliding_sync:
    image: ghcr.io/matrix-org/sliding-sync:latest
    container_name: sliding_sync
    depends_on:
      - postgres
      - app
    ports:
      - "8009:8009"
    environment:
      SYNCV3_SERVER: "http://app:8008"
      SYNCV3_DB: "postgres://postgres:${DATABASE_PASSWORD}@postgres:5432/syncv3?sslmode=disable"
      SYNCV3_SECRET: ${SYNCV3_SECRET}
      SYNCV3_BINDADDR: ":8009"

volumes:
  postgres_data:
```

If you already have a Docker Compose setup for Matrix Synapse running, run `docker compose pull` to update all the containers to the latest version.

Also, generate the `.env` file for your Docker containers:

```shell
touch .env
echo "SYNCV3_SECRET=$(openssl rand -hex 32)" > .env
echo "DATABASE_PASSWORD=$(openssl rand -hex 32)" >> .env
```

If you installed Synapse using another method, update it according to its documentation.

Here is my Nginx configuration (with a push service and the Matrix homeserver):

```nginx
server {
    root /var/www/html;
    server_name m1rai.net push.mszdev.com chat-web-hook.mszdev.com;

    location / {
        index index.html index.htm index.php;
    }

    location ~* ^(\/_matrix\/push) {
        proxy_pass http://localhost:7183;
        proxy_set_header X-Forwarded-For $remote_addr;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header Host $host;
    }

    location ~* ^(\/_matrix|\/_synapse\/client) {
        # note: do not add a path (even a single /) after the port in `proxy_pass`,
        # otherwise nginx will canonicalise the URI and cause signature verification
        # errors.
        proxy_pass http://localhost:8008;
        proxy_set_header X-Forwarded-For $remote_addr;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header Host $host;

        # Nginx by default only allows file uploads up to 1M in size
        # Increase client_max_body_size to match max_upload_size defined in homeserver.yaml
        client_max_body_size 50M;
    }

    location /.well-known/matrix/client {
        alias /var/www/html/.well-known/matrix/client;
        default_type application/json;
    }

    location /.well-known/matrix/server {
        alias /var/www/html/.well-known/matrix/server;
        default_type application/json;
    }

    listen 80;
    listen [::]:443 ssl;
    listen 443 ssl;
    ssl_certificate /home/azureuser/chat.crt;
    ssl_certificate_key /home/azureuser/chat.key;
}
```

Your `/.well-known/matrix/client` response should look like this (shown here for matrix.org), but with all hostnames replaced by your own:

```console
msz@Mszs-MacBook-Pro ~ % curl https://matrix.org/.well-known/matrix/client
{
    "m.homeserver": {
        "base_url": "https://matrix-client.matrix.org"
    },
    "m.identity_server": {
        "base_url": "https://vector.im"
    },
    "org.matrix.msc3575.proxy": {
        "url": "https://slidingsync.lab.matrix.org"
    }
}
```

If your sliding sync proxy (`org.matrix.msc3575.proxy`) runs on the same server, set its URL to the same value as your `m.homeserver` base URL.
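To see which endpoint a client would end up using, here is a hypothetical helper that reads a parsed `/.well-known/matrix/client` document. It assumes a client prefers `org.matrix.msc3575.proxy` when present and otherwise talks to `m.homeserver` directly (the native sliding sync case); individual SDKs may behave differently.

```javascript
// Hypothetical helper: choose the sliding sync base URL from a parsed
// /.well-known/matrix/client document. Not part of any Matrix SDK.
function slidingSyncBaseUrl(wellKnown) {
  const homeserver = wellKnown?.["m.homeserver"]?.base_url;
  if (typeof homeserver !== "string") {
    throw new Error("m.homeserver.base_url is required");
  }
  const proxy = wellKnown["org.matrix.msc3575.proxy"]?.url;
  // Prefer the proxy if advertised, else the homeserver; strip trailing slashes
  return (typeof proxy === "string" ? proxy : homeserver).replace(/\/+$/, "");
}

// Fetch and resolve for a server name (uses the global fetch of Node.js 18+)
async function discoverSlidingSync(serverName) {
  const res = await fetch(`https://${serverName}/.well-known/matrix/client`);
  if (!res.ok) throw new Error(`Discovery failed: HTTP ${res.status}`);
  return slidingSyncBaseUrl(await res.json());
}

// Usage:
// discoverSlidingSync("matrix.org").then(console.log);
```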

If you’re hosting your website on CloudFlare, you should use a CloudFlare Worker to serve the well-known files:

```javascript
// Replace these example values with your own servers
const HOMESERVER_URL = "https://matrix.org";
const IDENTITY_SERVER_URL = "https://vector.im";
const FEDERATION_SERVER = "matrix-federation.matrix.org:443";
const SLIDING_PROXY_URL = "https://slidingsync.lab.matrix.org";

export default {
  async fetch(request, env) {
    const path = new URL(request.url).pathname;
    const headers = new Headers({
      "Content-Type": "application/json",
      // Web-based Matrix clients need CORS to read the well-known files
      "Access-Control-Allow-Origin": "*",
    });

    switch (path) {
      case "/.well-known/matrix/client":
        return new Response(
          JSON.stringify({
            "m.homeserver": { "base_url": HOMESERVER_URL },
            "m.identity_server": { "base_url": IDENTITY_SERVER_URL },
            "org.matrix.msc3575.proxy": { "url": SLIDING_PROXY_URL },
          }, null, 4),
          { headers }
        );

      case "/.well-known/matrix/server":
        return new Response(
          JSON.stringify({ "m.server": FEDERATION_SERVER }, null, 4),
          { headers }
        );

      default:
        // Unknown paths get a 404 instead of a 200
        return new Response("Invalid request", { status: 404 });
    }
  },
};
```

If you’re using Nginx, provide your own well-known files and add rules that serve the well-known requests from that path:

```nginx
location /.well-known/matrix/client {
    alias /var/www/html/.well-known/matrix/client;
    default_type application/json;
}

location /.well-known/matrix/server {
    alias /var/www/html/.well-known/matrix/server;
    default_type application/json;
}
```

Here is example content for the client file at `/var/www/html/.well-known/matrix/client`:

```json
{
    "m.homeserver": {
        "base_url": "https://matrix-client.matrix.org"
    },
    "m.identity_server": {
        "base_url": "https://vector.im"
    },
    "org.matrix.msc3575.proxy": {
        "url": "https://slidingsync.lab.matrix.org"
    }
}
```

Here is example content for the server file at `/var/www/html/.well-known/matrix/server`:

```json
{ "m.server": "matrix-federation.matrix.org:443" }
```

Don’t forget to reload Nginx to apply the updated configuration:

```shell
sudo systemctl reload nginx
```

This article explains how to set up your own Bluesky personal data server (PDS), so that your follow data, posts, and media are stored on your own server.

With a proper setup, you will be able to follow people on other AT Protocol instances (like bsky.social), and people on bsky.social can follow you.

We will use a CloudFlare Tunnel to avoid exposing your server's IP address. This also removes the need for a public IPv4 address, so you can easily run a PDS on a Raspberry Pi.

To use the tunnel feature, register a free CloudFlare account. Using CloudFlare also helps protect your server against some attacks.

Make sure your server meets the requirements:

Server Requirements

- Operating System: Ubuntu 22.04
- Memory (RAM): 2+ GB
- CPU Cores: 2+
- Storage: 40+ GB SSD
- Architectures: amd64, arm64

I recommend using an ARM server (like an AWS t4g.small).

Then SSH into your server and run the following commands to update your packages:

```shell
sudo apt-get update
sudo apt-get upgrade --with-new-pkgs -y
```

Before starting this step, you need to add your domain to CloudFlare, which means pointing your domain's NS records to CloudFlare's nameservers.

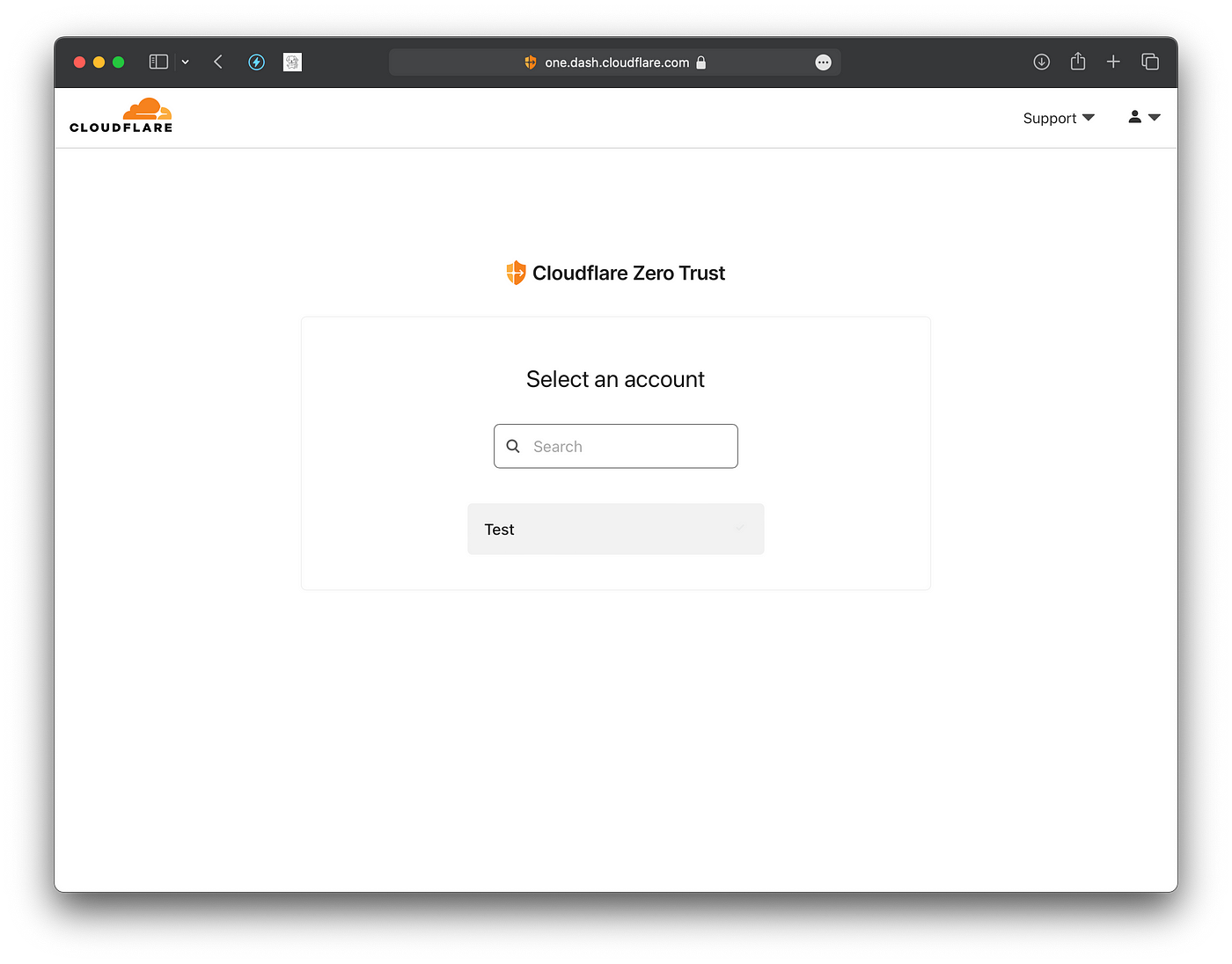

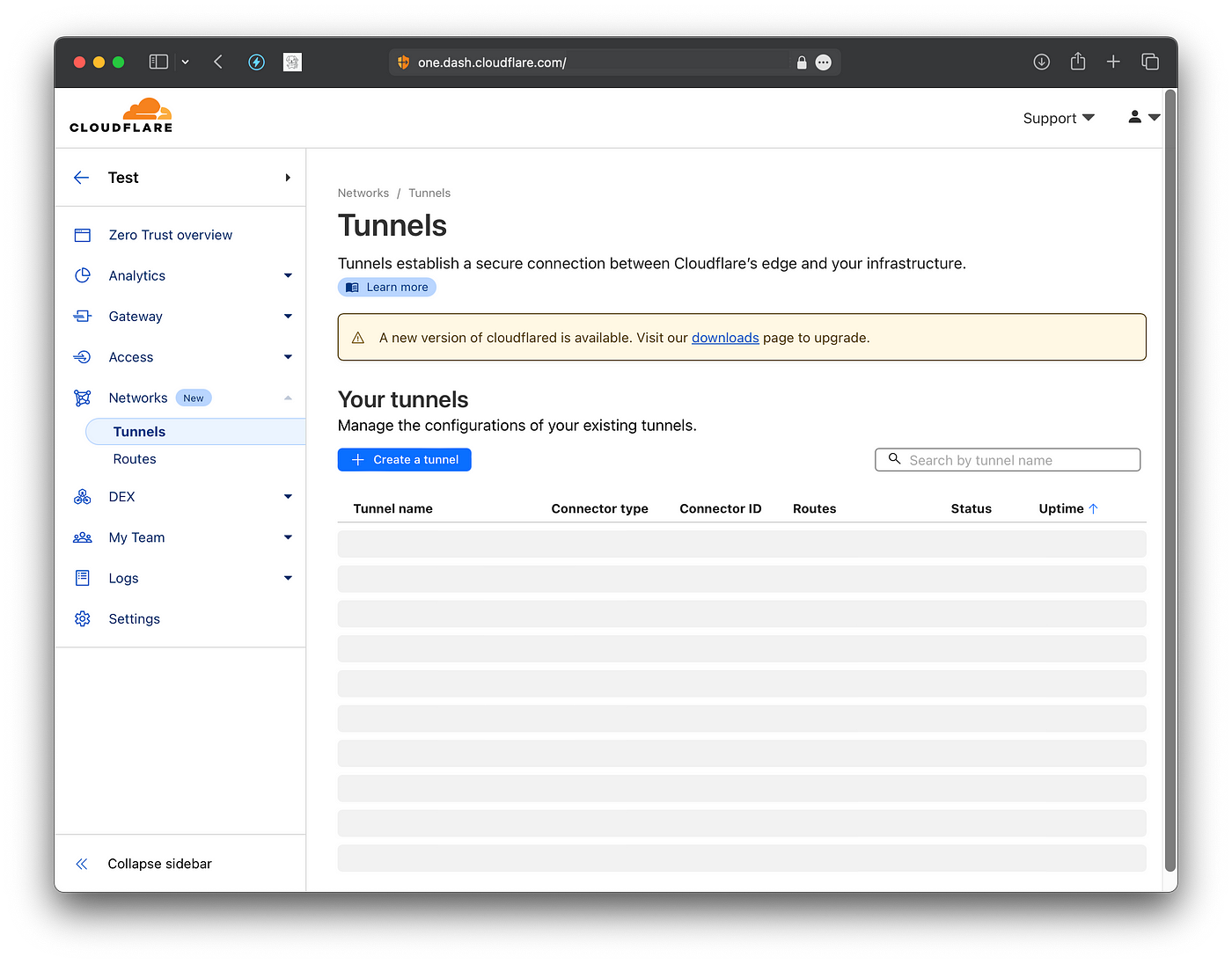

First, go to one.dash.cloudflare.com, log into your CloudFlare account, and open your CloudFlare Zero Trust account:

Then open the “Tunnels” page in the “Networks” tab and click “Create a tunnel”.

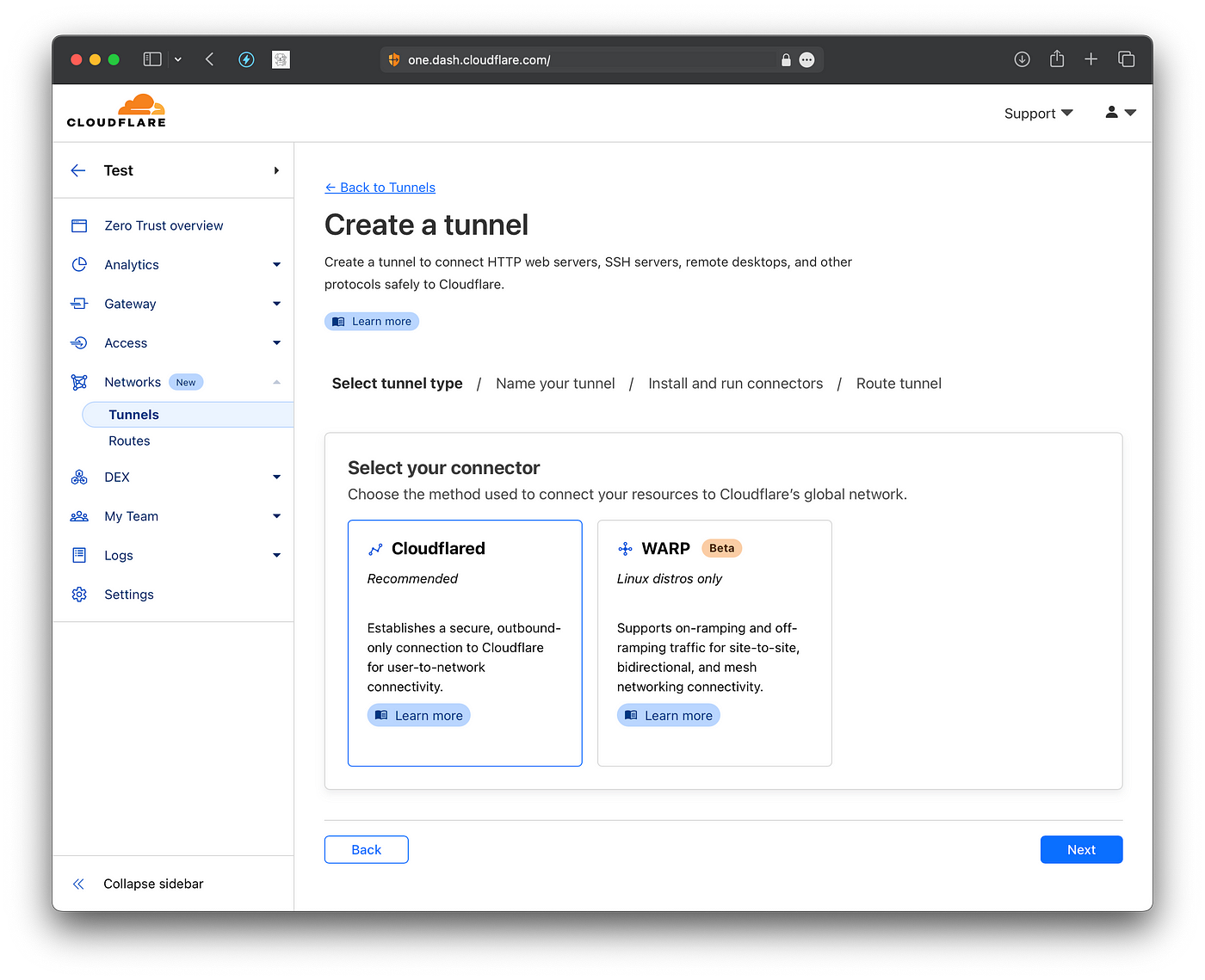

Click to use Cloudflared

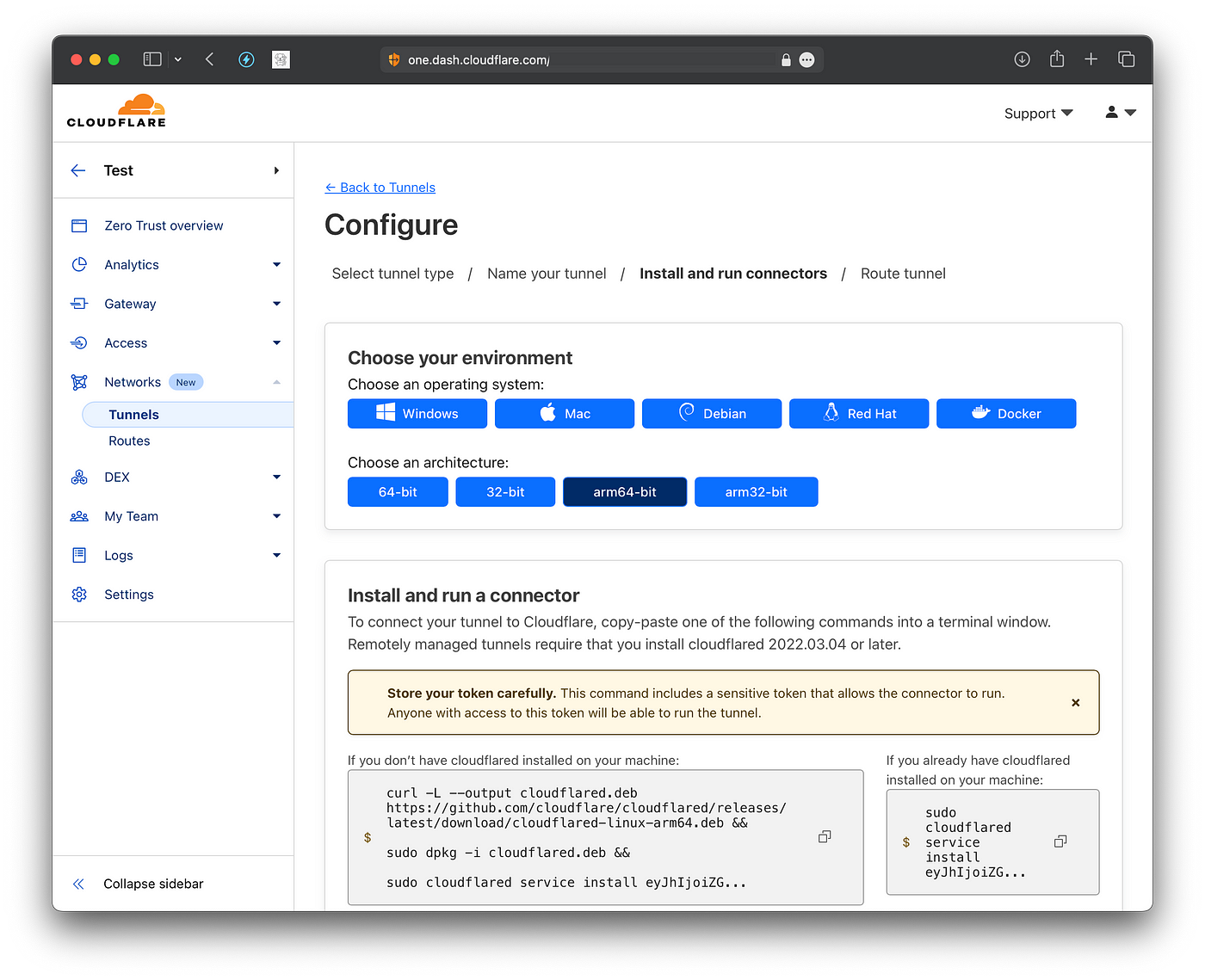

Now select “Debian” (since we are using Ubuntu on our server) and your CPU type (for ARM, select arm64-bit).

Copy the command containing the curl, dpkg, and cloudflared invocations and run it in your terminal. This connects your server to the CloudFlare network automatically.

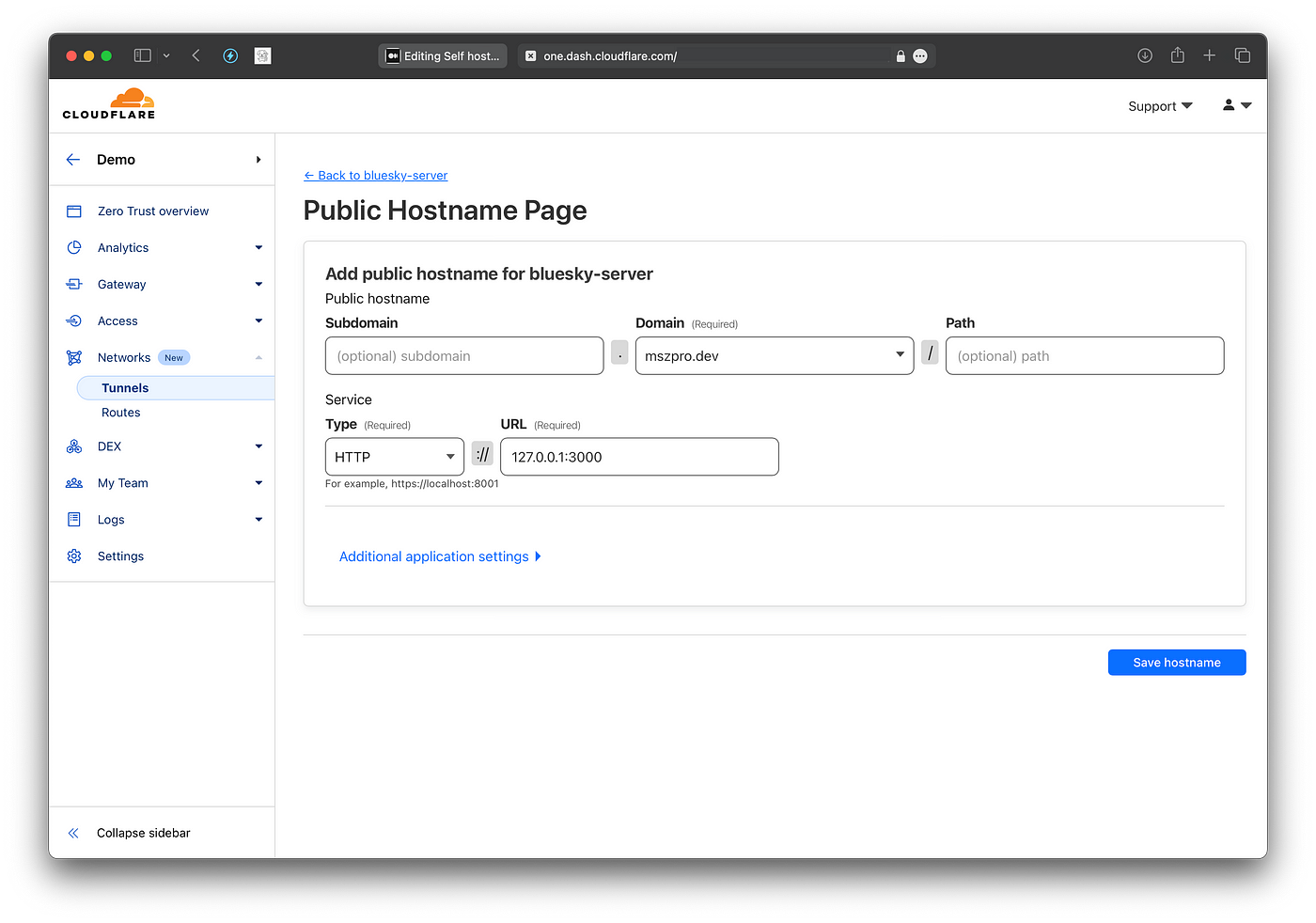

Next, add your domain and map it to `http://127.0.0.1:3000`. By default, the Bluesky PDS listens on port 3000 on your local machine over plain HTTP.

Don’t worry about HTTPS: CloudFlare issues the certificate automatically.

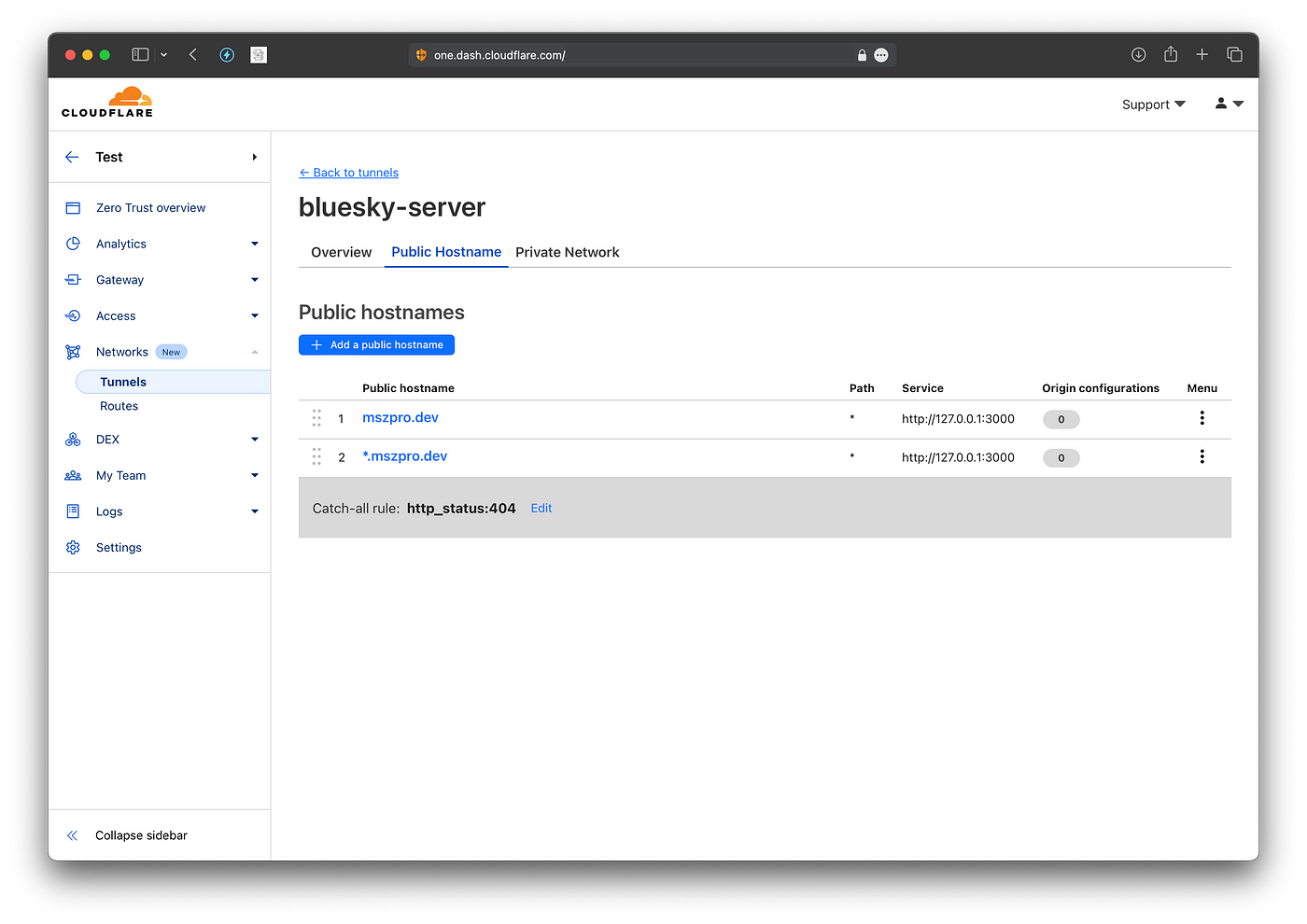

Now open the tunnel entry (in my case, it is called bluesky-server) and go to the Public Hostname tab. Add a new entry for the wildcard subdomain: in Bluesky, handles are domain names, and they need to resolve to your server.

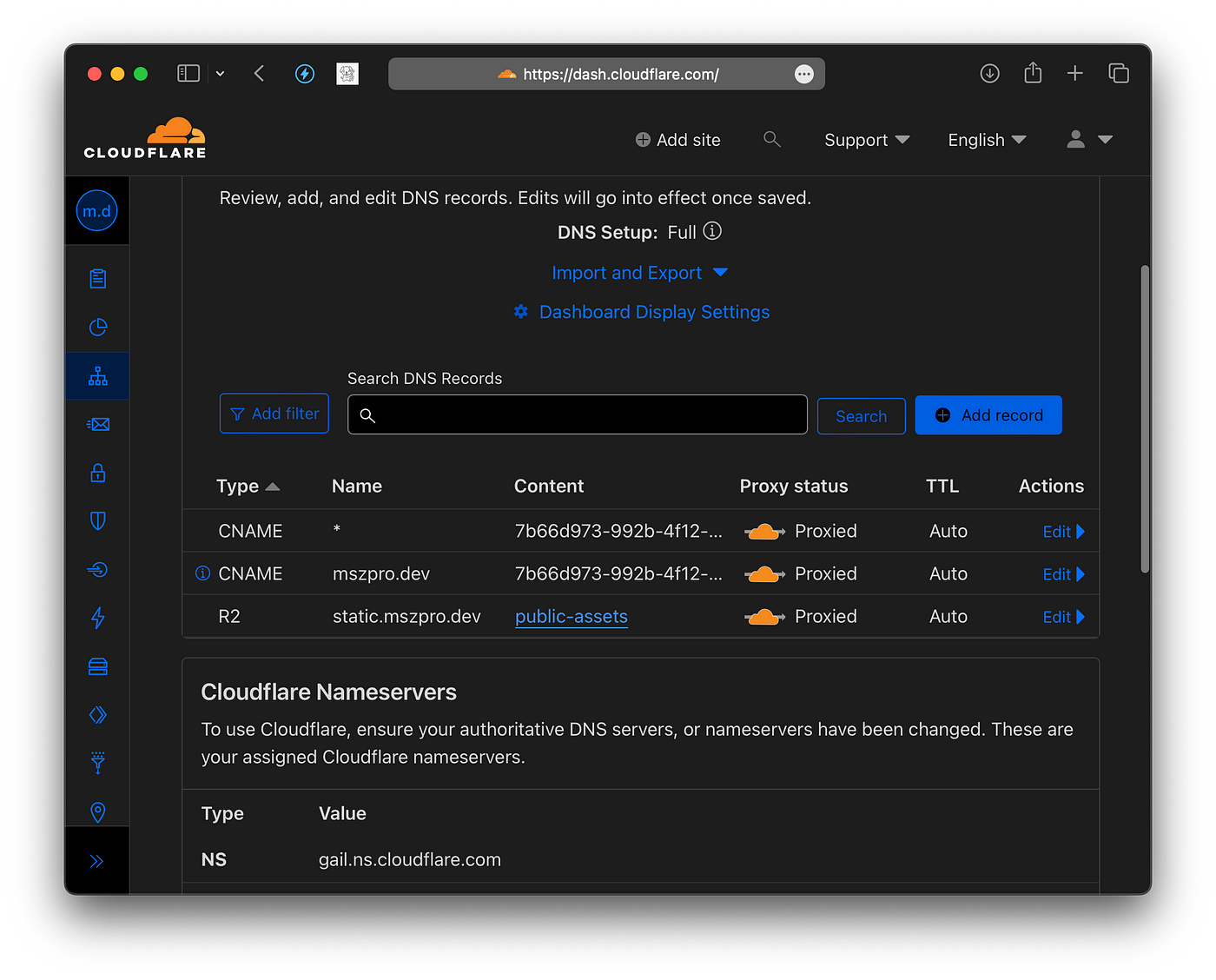
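The wildcard entry matters because of how AT Protocol resolves a domain handle: a resolver either looks up a DNS TXT record at `_atproto.<handle>` (value `did=<DID>`) or fetches `https://<handle>/.well-known/atproto-did`, which returns the DID as plain text. With the wildcard public hostname, that HTTPS request for any account subdomain is forwarded to the PDS. A minimal sketch of the HTTPS method, with hypothetical function names and a simplified DID check:

```javascript
// Sketch of AT Protocol handle resolution over HTTPS (hypothetical helpers).
function atprotoDidUrl(handle) {
  // Handles are domain names; drop a leading "@" and normalize case
  const host = handle.replace(/^@/, "").toLowerCase();
  return `https://${host}/.well-known/atproto-did`;
}

// The response body should be a single DID such as "did:plc:..."
function parseDidResponse(body) {
  const did = body.trim();
  // Simplified DID syntax check, not the full specification
  if (!/^did:[a-z]+:[A-Za-z0-9._:%-]+$/.test(did)) {
    throw new Error(`Not a DID: ${JSON.stringify(did)}`);
  }
  return did;
}

// Resolve a handle to its DID (uses the global fetch of Node.js 18+)
async function resolveHandle(handle) {
  const res = await fetch(atprotoDidUrl(handle));
  if (!res.ok) throw new Error(`Handle resolution failed: HTTP ${res.status}`);
  return parseDidResponse(await res.text());
}

// Usage (hypothetical handle on your PDS domain):
// resolveHandle("alice.mszpro.dev").then(console.log);
```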

Because CloudFlare does not create a DNS record for wildcard tunnel entries, go to the DNS tab of the CloudFlare dashboard and add the wildcard CNAME record yourself, with `*` as the name and the same target as the root domain's record.
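If you prefer the CloudFlare API over the dashboard, the same record can be created with a `POST` to `/client/v4/zones/<zone_id>/dns_records`. This is a sketch assuming you have the zone ID, an API token with DNS edit permission, and the tunnel ID, and assuming your root record points at `<tunnel-id>.cfargotunnel.com` (the target CloudFlare uses for tunnel hostnames; copy your root record's value if it differs). The function names are hypothetical.

```javascript
// Sketch: create the wildcard CNAME for a CloudFlare Tunnel via the API.
// domain, zoneId, apiToken and tunnelId are placeholders you must supply.
function wildcardTunnelRecord(domain, tunnelId) {
  return {
    type: "CNAME",
    name: `*.${domain}`,                     // wildcard for every handle subdomain
    content: `${tunnelId}.cfargotunnel.com`, // assumed same target as the root record
    proxied: true,                           // tunnel routing goes through CloudFlare's proxy
  };
}

async function createWildcardRecord({ domain, zoneId, apiToken, tunnelId }) {
  const res = await fetch(
    `https://api.cloudflare.com/client/v4/zones/${zoneId}/dns_records`,
    {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify(wildcardTunnelRecord(domain, tunnelId)),
    }
  );
  const data = await res.json();
  // The API reports failures in the `success` and `errors` fields
  if (!data.success) throw new Error(JSON.stringify(data.errors));
  return data.result;
}
```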

CloudFlare Tunnel is now set up. Once the Bluesky PDS is running, you should be able to access your instance via your domain.

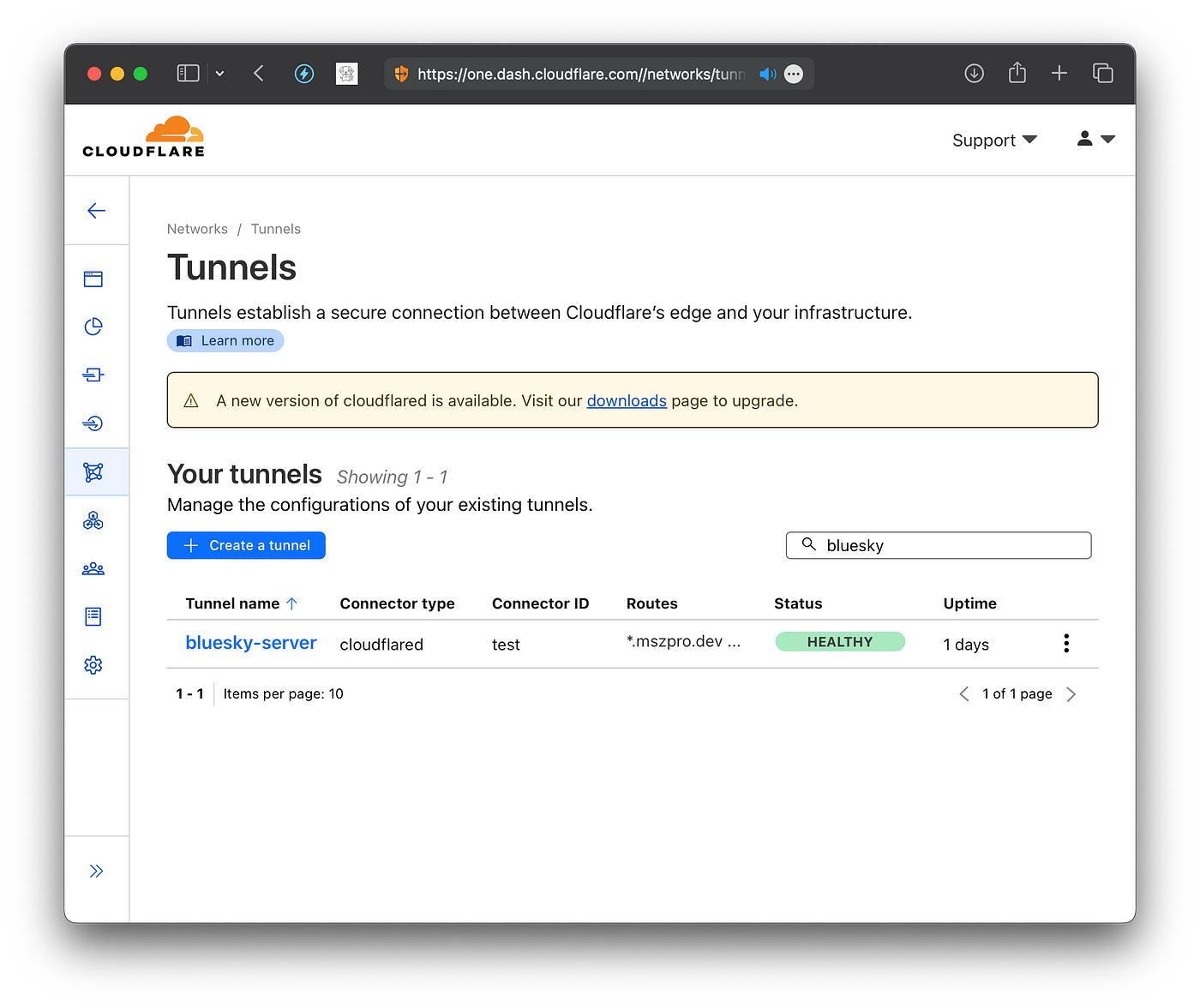

You can also check your server's status in the tunnel list on the CloudFlare dashboard. It should say Healthy.

First, download the installation script:

```shell
wget https://raw.githubusercontent.com/bluesky-social/pds/main/installer.sh
```

Then make it executable and run it:

```shell
chmod +x installer.sh
sudo ./installer.sh
```

That’s it. The script installs the server and runs it using Docker.

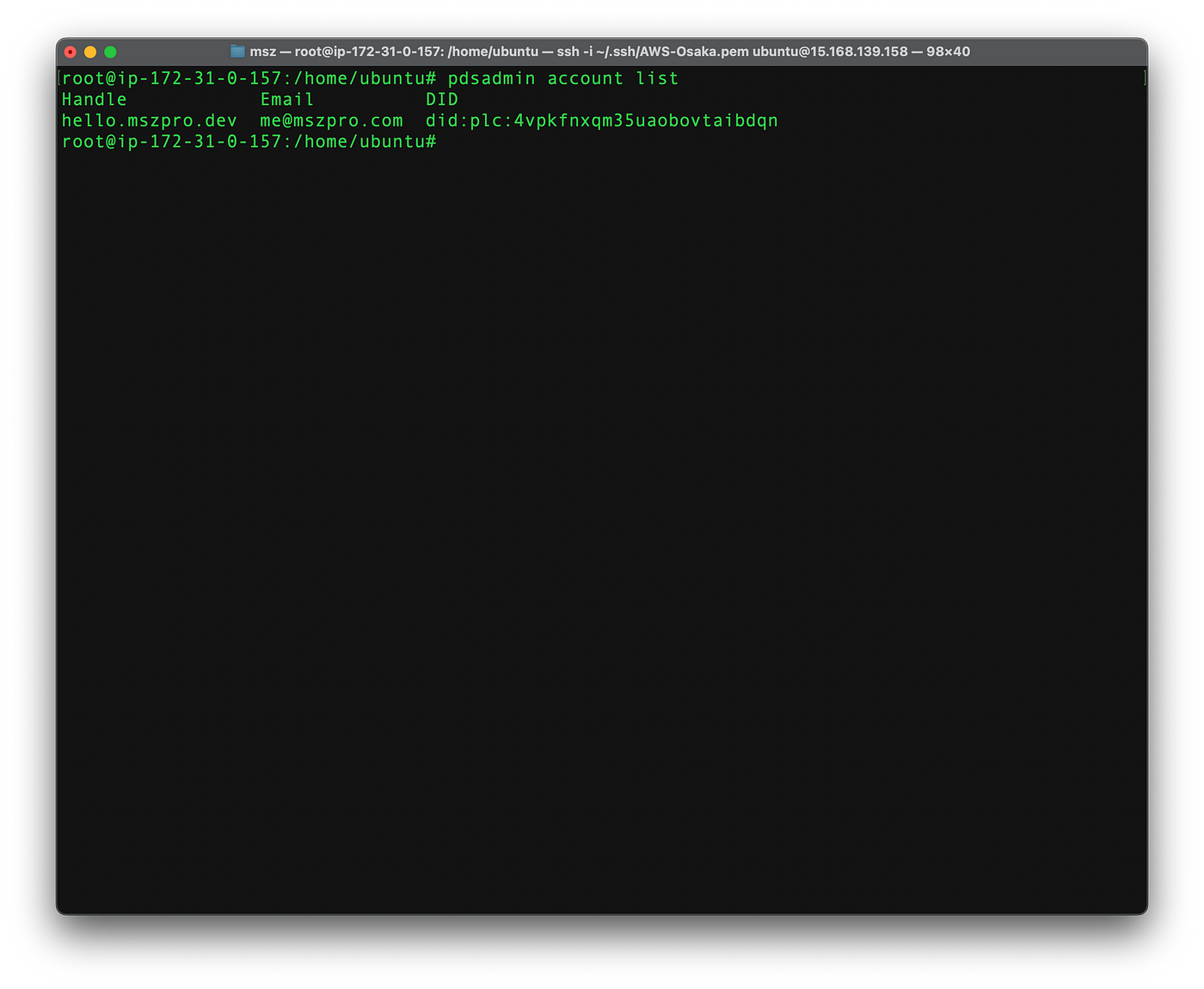

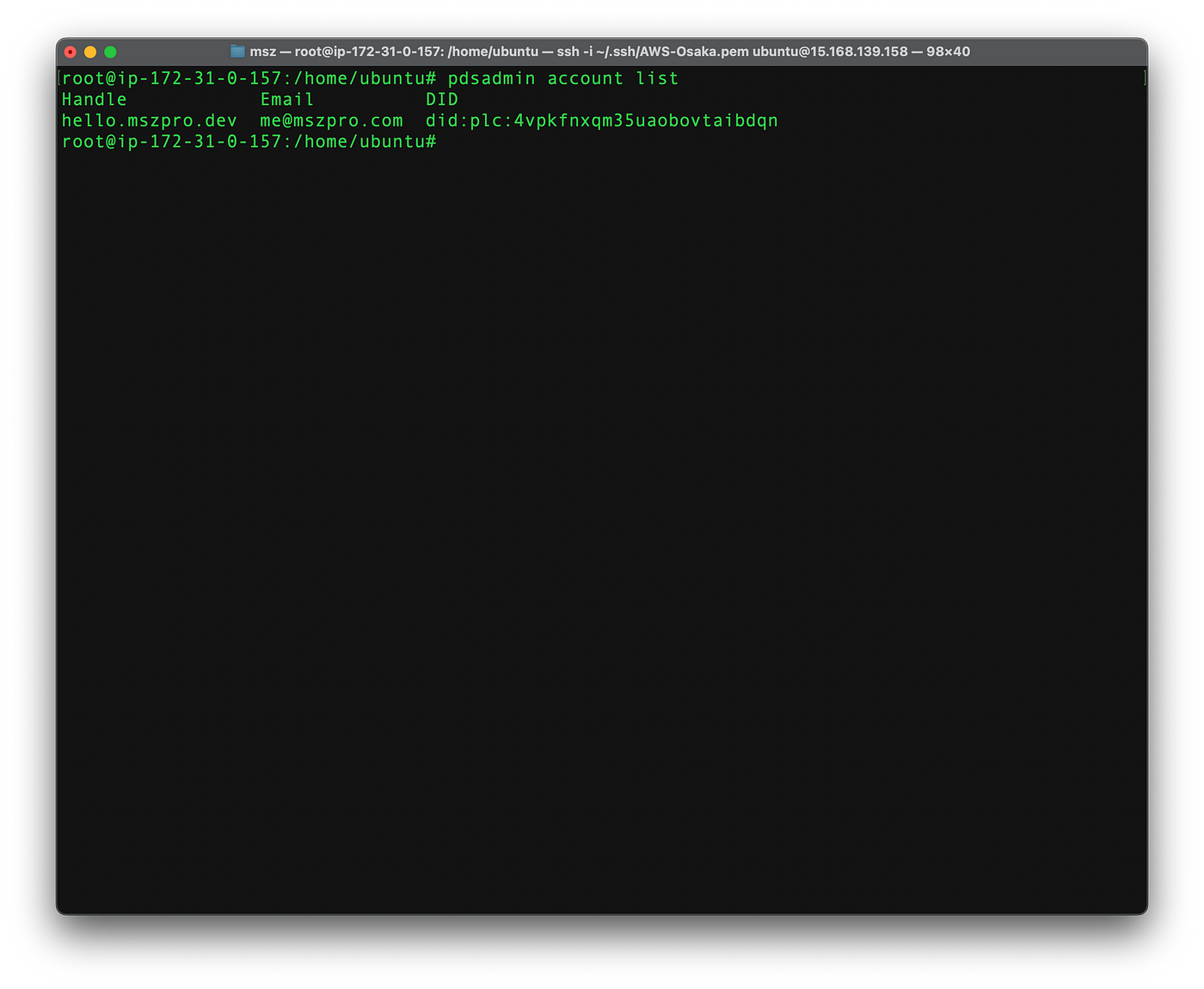

You can create a new account with:

```shell
sudo pdsadmin account create
```

You can also create an invite code:

```shell
sudo pdsadmin create-invite-code
```

Note that you can only have up to 10 accounts if you want to federate with the main Bluesky network. As stated on the Bluesky PDS Discord:

The Bluesky Relay will rate limit PDSs in the network. Each PDS will be able to have up to 10 accounts, and produce up to 1500 events/hr and 10,000 events/day. This phase of federation is intended for developers and self-hosters, and we do not yet support larger service providers.

So be careful not to create many accounts.
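The two event limits interact: 1,500 events/hr sustained for 24 hours would be 36,000 events, far above the 10,000/day cap, so for steady activity the daily cap is the one that binds, averaging about 416 events per hour. A small sketch of a budget check under those quoted limits; how the relay actually windows and counts events is not stated, so the rolling hour and day counters here are an assumption, and the names are hypothetical.

```javascript
// Sketch: remaining budget under the quoted relay limits.
// Rolling hourly and daily counters are an assumption, not relay code.
const LIMITS = { maxAccounts: 10, perHour: 1500, perDay: 10000 };

function canAddAccount(currentAccounts) {
  return currentAccounts < LIMITS.maxAccounts;
}

function remainingEvents(eventsLastHour, eventsLastDay) {
  const hourLeft = LIMITS.perHour - eventsLastHour;
  const dayLeft = LIMITS.perDay - eventsLastDay;
  // Whichever window is closer to its cap limits the next events
  return Math.max(0, Math.min(hourLeft, dayLeft));
}

// Average hourly rate that stays under the daily cap over 24 hours
const sustainableHourly = Math.floor(LIMITS.perDay / 24);

console.log(remainingEvents(0, 0));      // 1500: the hourly cap binds
console.log(remainingEvents(200, 9900)); // 100: the daily cap binds
console.log(sustainableHourly);          // 416
```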

After adding your account, you might find that your profile cannot be accessed. For me, it started working after I rebooted the server.

Currently, you need to register your PDS with the Bluesky team.

Initially to join the network you’ll need to join the AT Protocol PDS Admins Discord and register the hostname of your PDS. We recommend doing so before bringing your PDS online. In the future, this registration check will not be required.

The application is easy. You join the Discord group, submit a form, and the Bluesky team should add your instance within about a day.

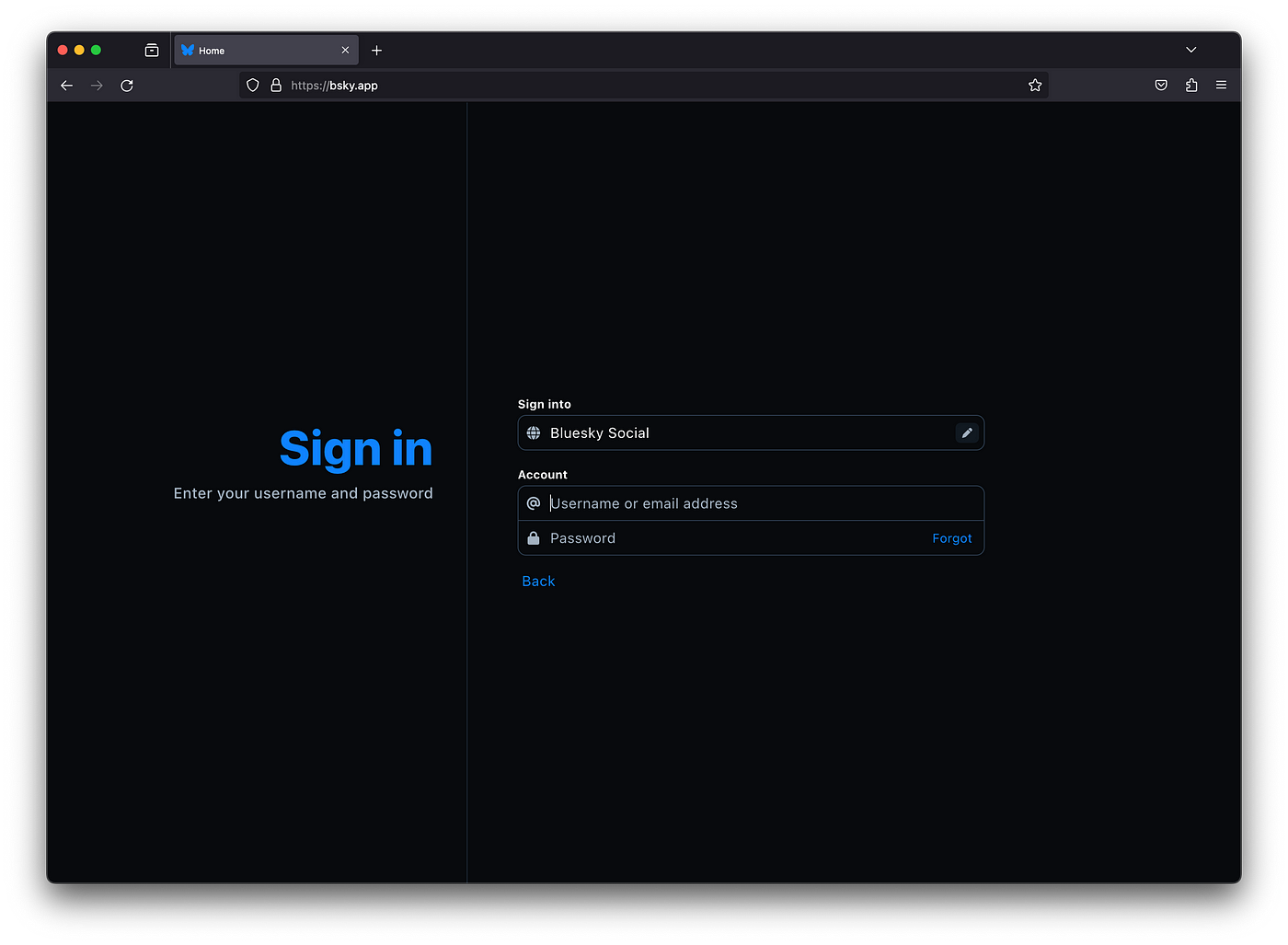

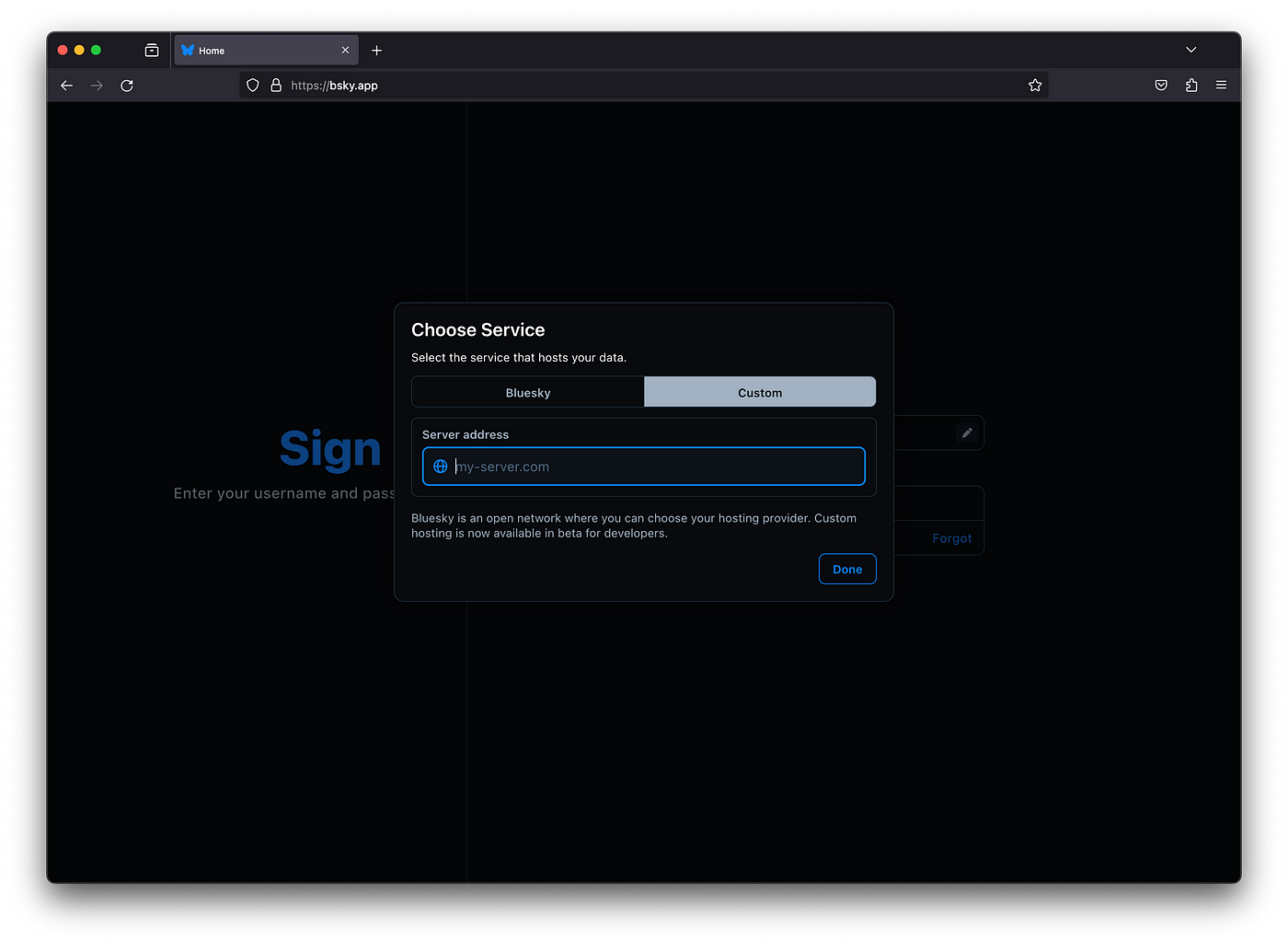

You can log into your instance on bsky.app by entering your server hostname manually.

First, tap the pencil icon in the sign-in section:

Then enter your hostname, like mszpro.dev in my example.

Until your request is accepted, you will not be able to follow others on the main Bluesky network, and your account cannot be found from bsky.social.

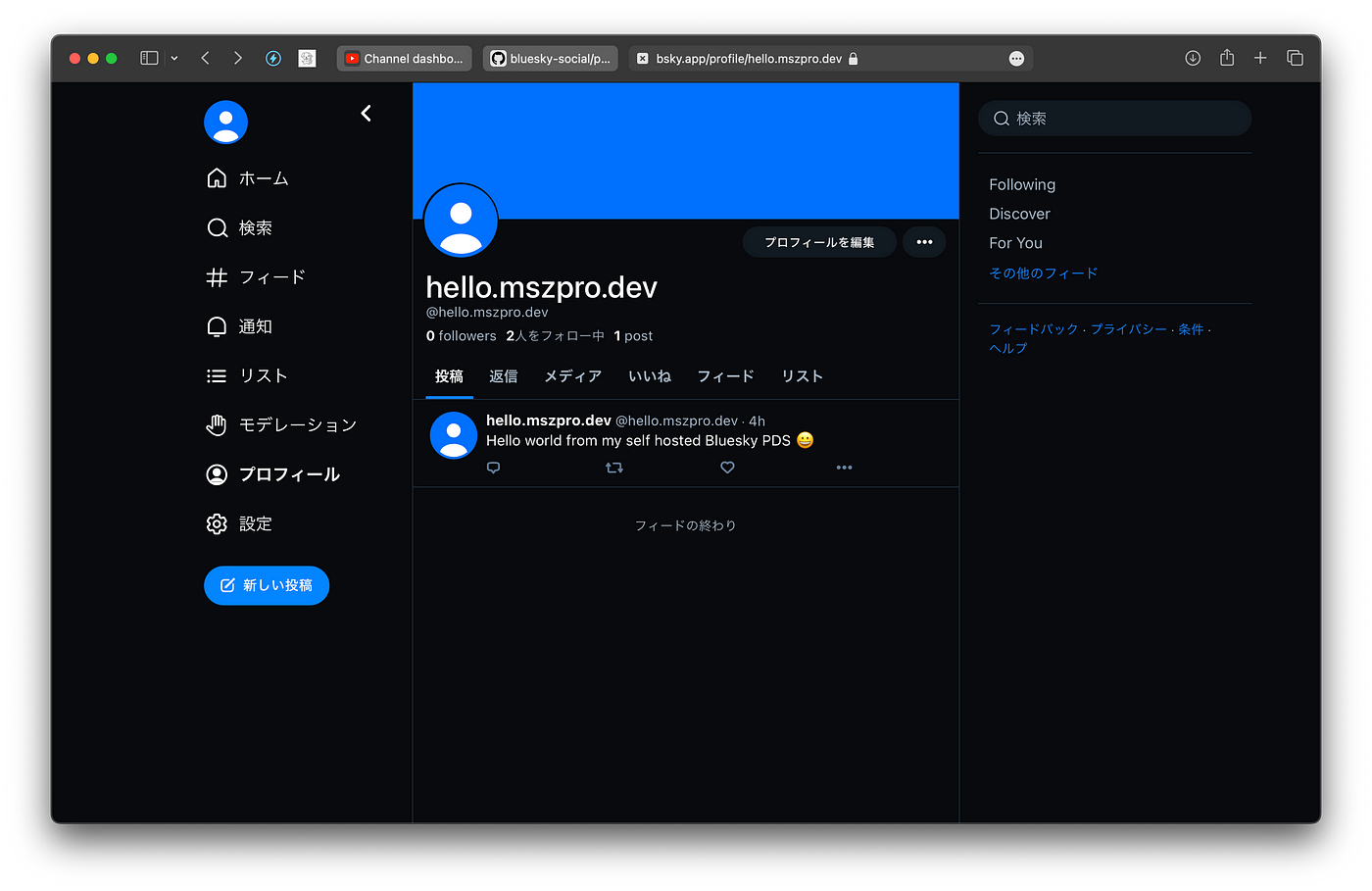

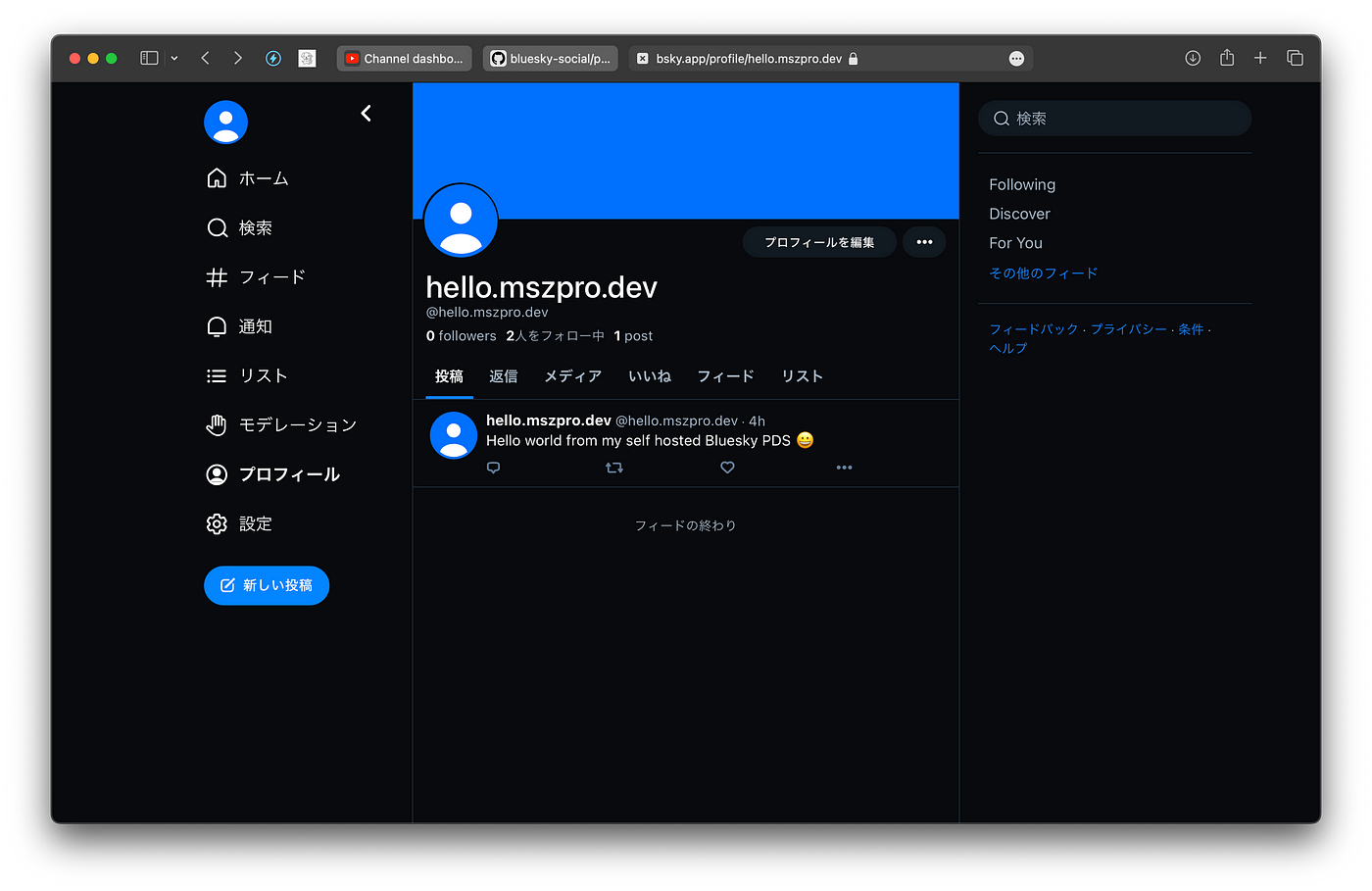

After about 6 hours, my request was approved. Now I can access my profile on my self-hosted server from bsky.social, and I can follow people on bsky.social from my own server:

Here are some useful commands:

- Check service status: `sudo systemctl status pds`
- Watch service logs: `sudo docker logs -f pds`
- Back up service data: the `/pds` directory
- PDS admin command: `pdsadmin`
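To check the PDS from outside (through the tunnel), you can also request its health endpoint, `/xrpc/_health`, which returns a small JSON object containing the server version. A sketch assuming Node.js 18+ for the global `fetch`; the function names and hostname are placeholders.

```javascript
// Sketch: query the PDS health endpoint through its public hostname.
function healthUrl(pdsHost) {
  return new URL("/xrpc/_health", `https://${pdsHost}`).toString();
}

async function checkPdsHealth(pdsHost) {
  const res = await fetch(healthUrl(pdsHost));
  if (!res.ok) throw new Error(`PDS unhealthy: HTTP ${res.status}`);
  return res.json(); // an object with a "version" field
}

// Usage (placeholder hostname):
// checkPdsHealth("pds.example.com").then(console.log).catch(console.error);
```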

I independently developed a Fediverse app that supports Mastodon, Misskey, and Bluesky all in one. It was featured in a TechCrunch article too!